Multimodal model for motion generation

Train and deploy billion-parameter language-motion multimodal models that generate realistic 3D human motions from text.

I am an AI Research Scientist specializing in cutting-edge algorithms and models that advance the capabilities of AI systems. At Intel, I have led multiple research projects on multimodal LLMs that integrate language, vision, and physics, enabling richer understanding of and interaction with the world. My work also includes optimizing training recipes to improve LLM performance and inference efficiency.

Prior to this, I received my PhD from Penn State, where I was advised by Prof. Bo Cheng. My dissertation work was at the intersection of robot learning and agile locomotion. During my graduate studies, I was fortunate to intern at Intel Labs and MathWorks.

I’ve had the privilege of collaborating with some of the most talented researchers and engineers, including Vladlen Koltun, German Ros, and Alan Fern, and I am grateful for those experiences.

Recent projects in LLMs, multimodal models, and robotics.

Train and deploy billion-parameter language-motion multimodal models that generate realistic 3D human motions from text.

Learn control policies for physically realistic bipedal locomotion on humanoid robots, and transfer them to real robots.

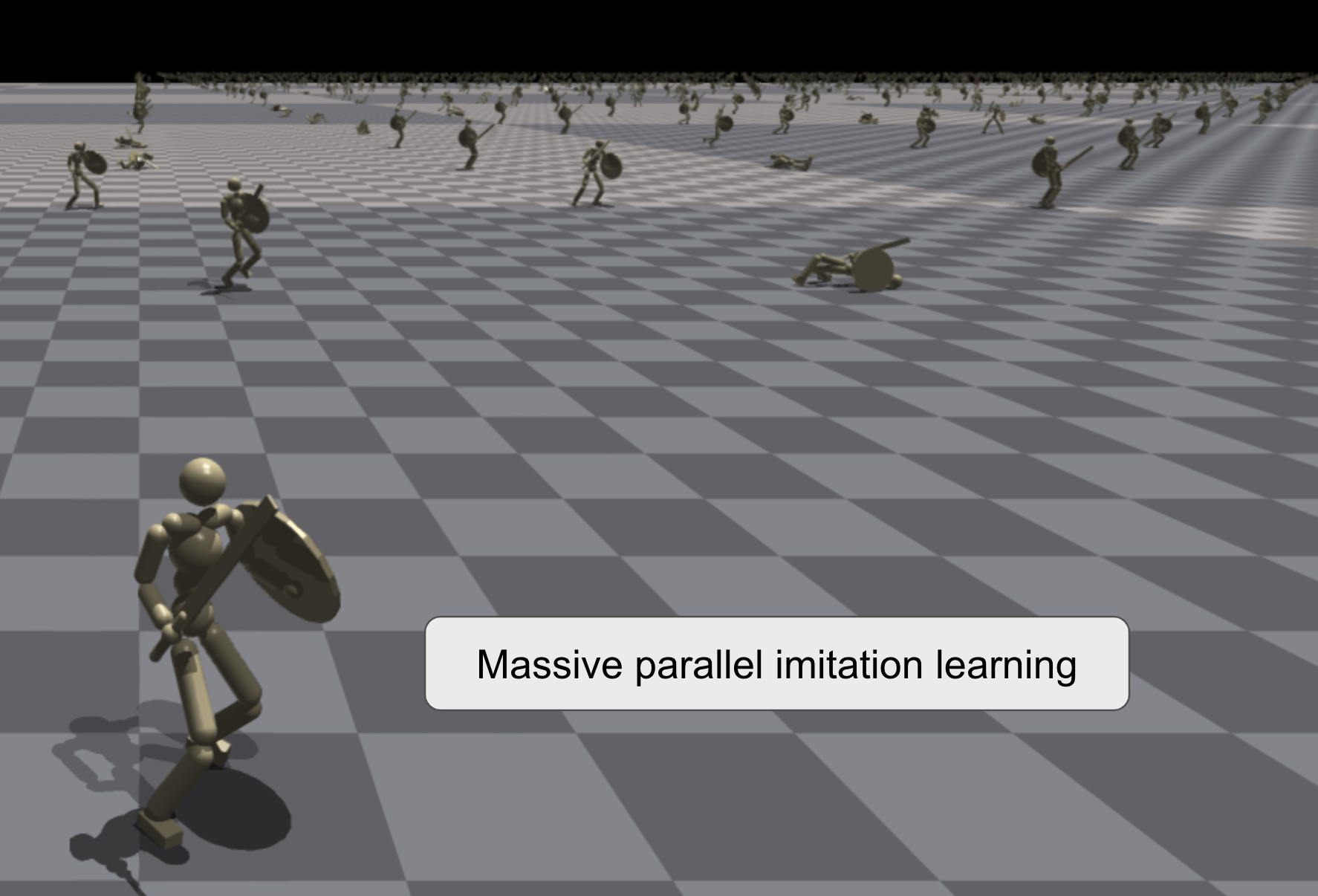

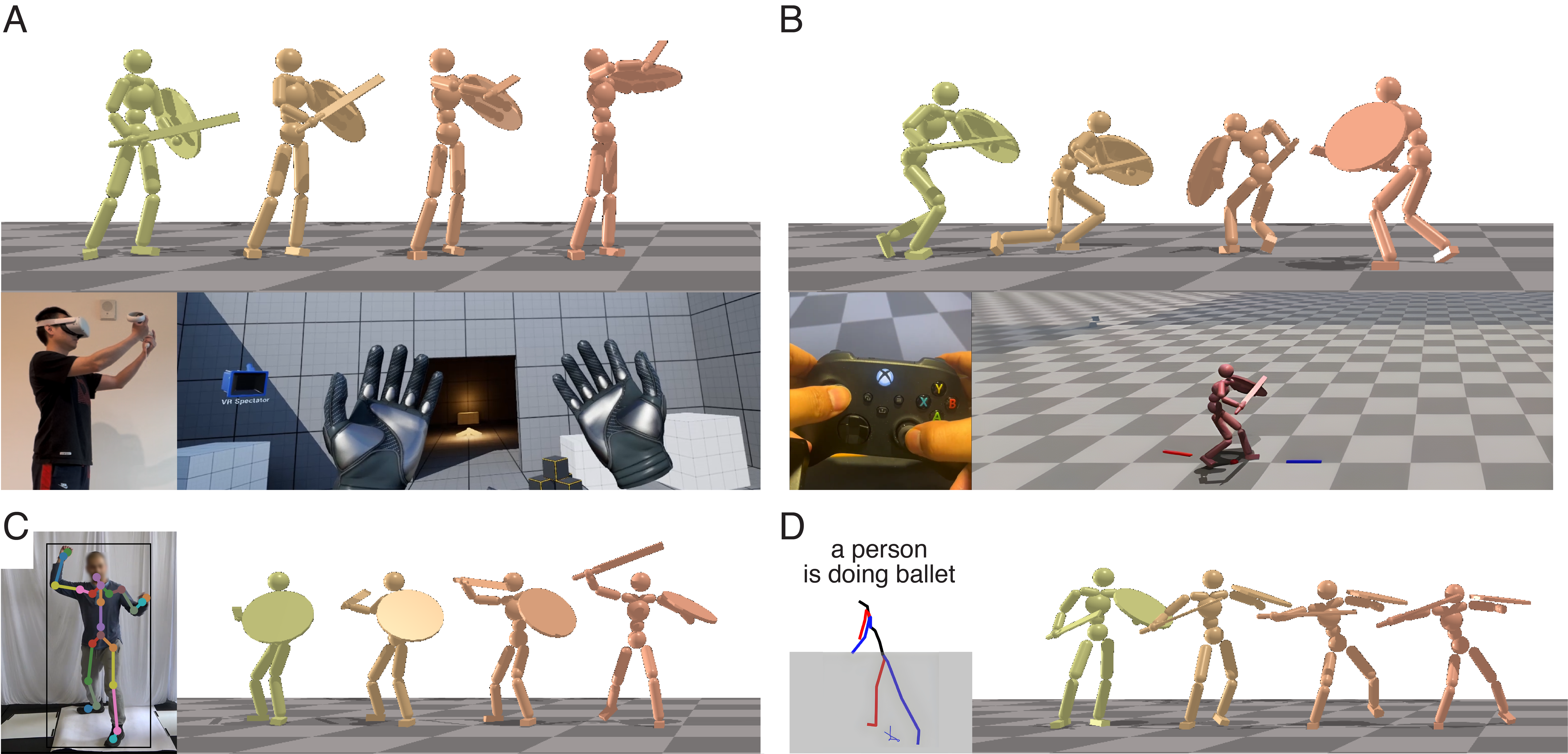

Develop an imitation learning framework that learns diverse human motions using massively parallel simulation.

(* equal contribution)

Keywords: humanoid motion generation, multimodal, imitation learning

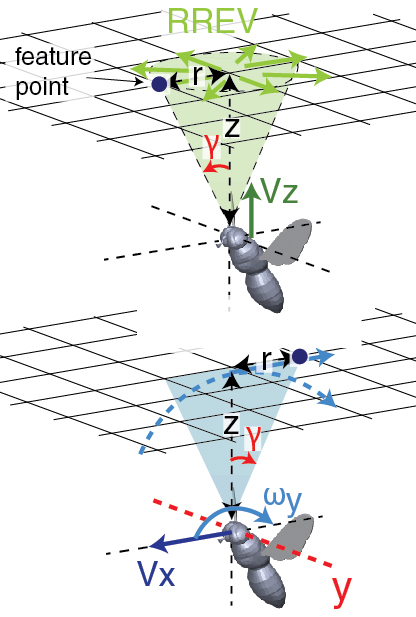

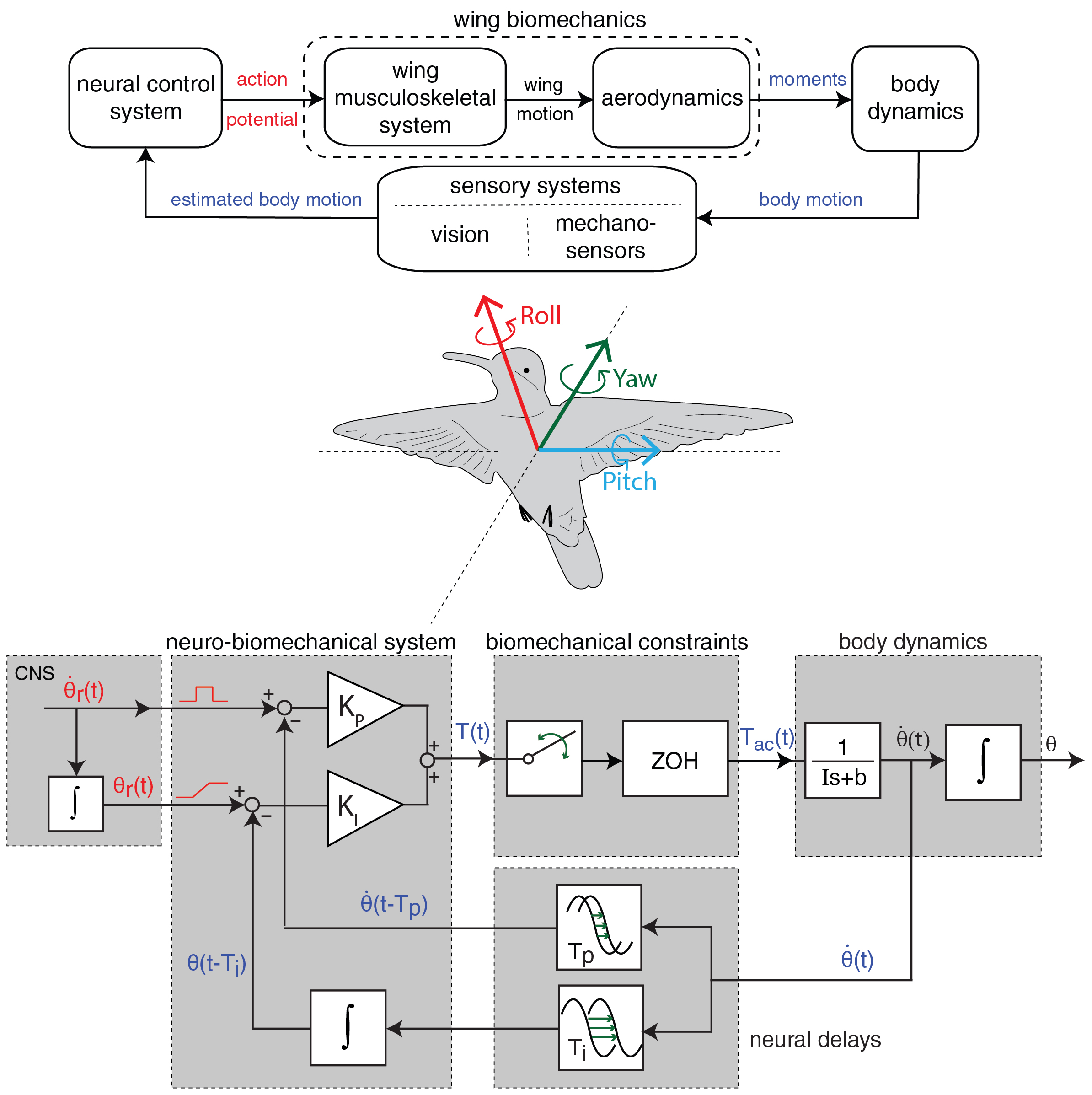

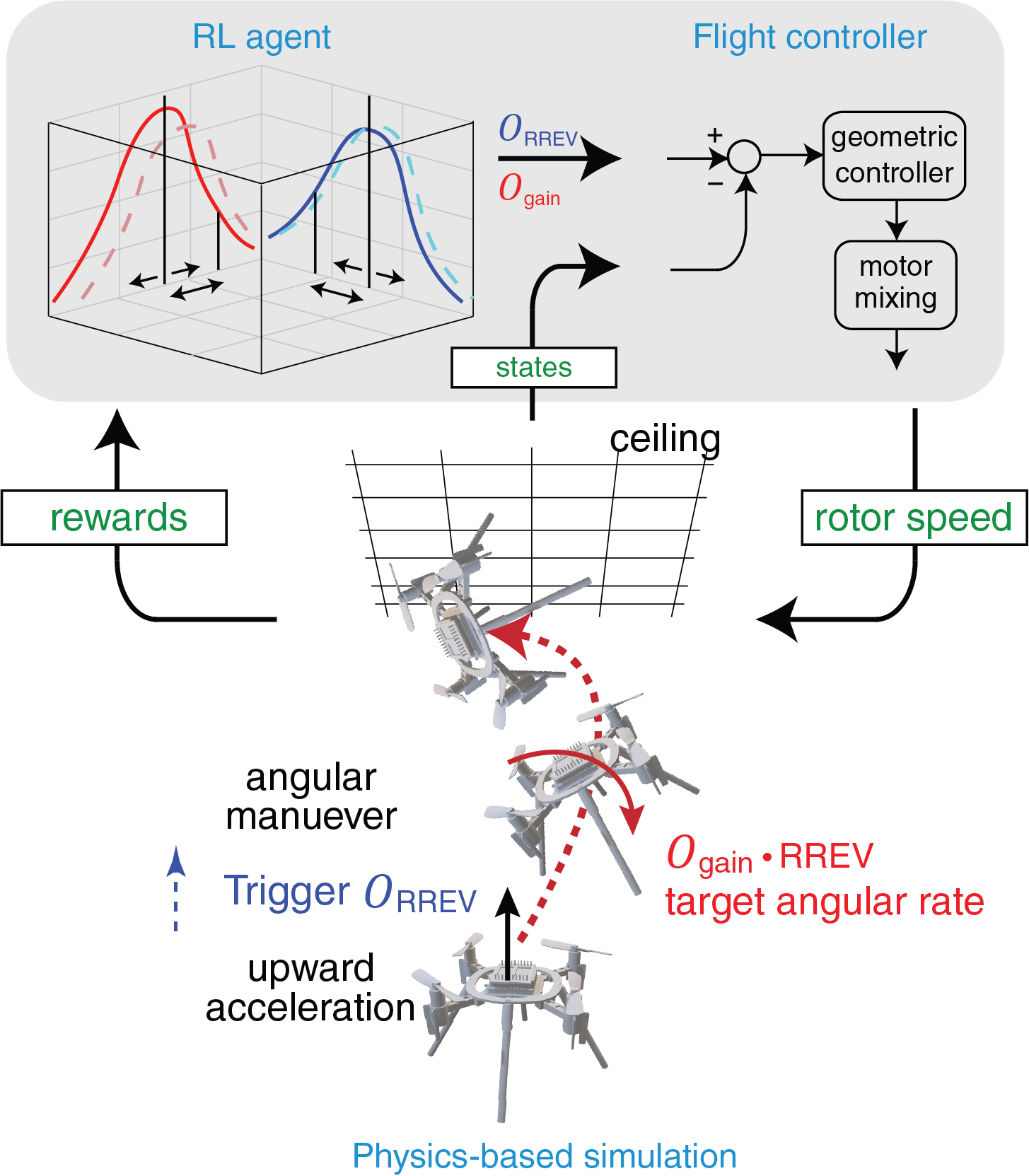

Keywords: policy gradient, vision-guided inverted landing, nonlinear geometric control, quadrotor, physics-based simulation

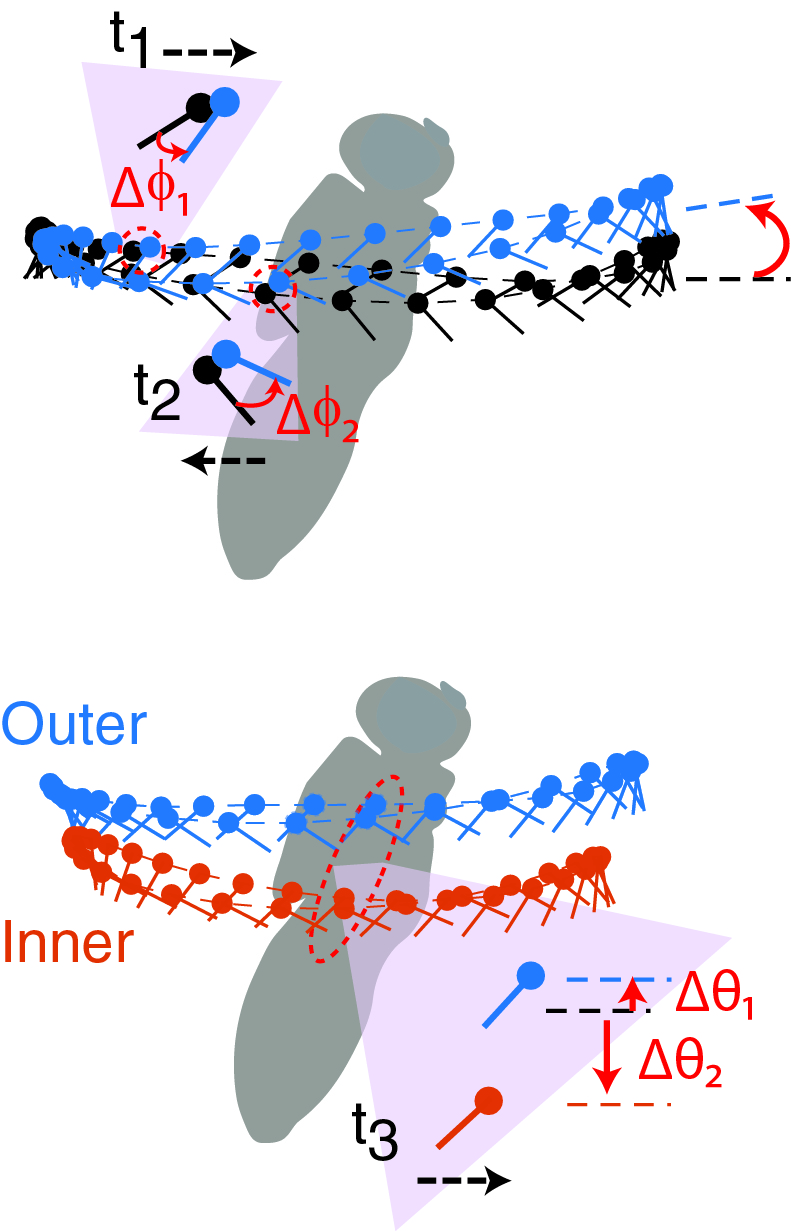

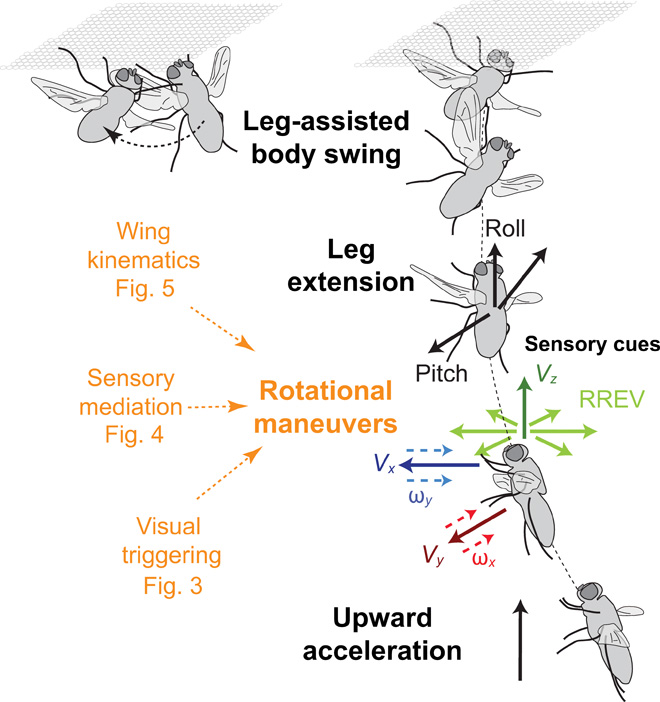

Keywords: optical flow, inverted landing, flapping wing aerodynamics, aggressive maneuvers

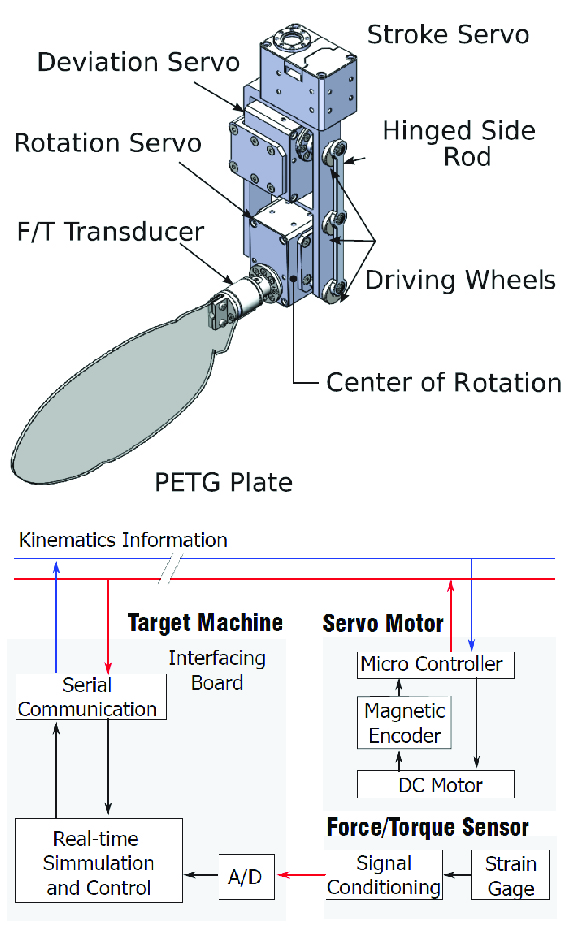

Keywords: policy search, real-time learning, flapping wing robot, dynamically scaled